Pulse

Heart Rate Detection

A person’s heart rate is an important physiological parameter that can strongly indicate their physiological state. For instance, heart rate is known to correlate with feelings of stress and anxiety, which makes it of interest to researchers seeking to understand the state of a given subject. However, traditional methods of measuring heart rate often require physical measurement devices that do not scale easily and tend to make subjects conscious of being measured. Facial heart rate determination is a relatively recent approach that estimates a subject’s heart rate at low cost and at scale, thanks to the convenience and wide availability of webcams and computers.

The multimodal toolkit contains an implementation of a heart rate determination application. This application uses the Eulerian Video Magnification (EVM) approach to assess a subject’s heart rate through minute tonal changes in the subject’s face. The application takes a video sequence as input, decomposes and filters each frame, and then amplifies pulse signals that would otherwise be imperceptible. These amplified signals are used to model the flow of blood entering and exiting the subject’s face and, in turn, to infer the subject’s heart rate.

Background

Human skin color varies slightly with blood circulation due to the inflow and outflow of blood in the face. The flow of blood therefore generates signals that can be used to infer a person’s heart rate, although these signals are imperceptible to the naked eye. With improvements in webcam and computer vision technology, they can be captured and magnified by applying spatial and temporal processing to the webcam video, which makes it possible to estimate a person’s heart rate in real time using only their webcam.

Process

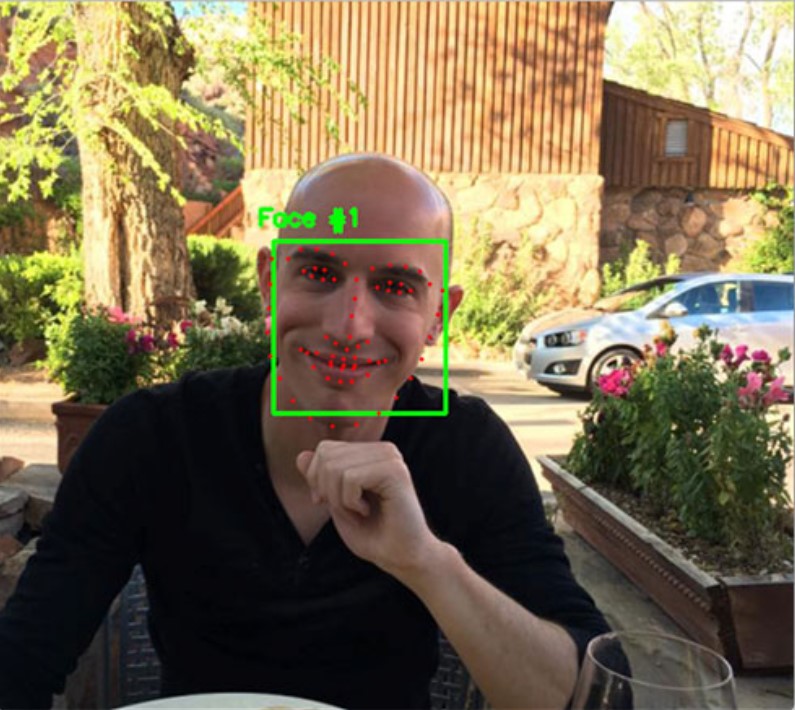

The tool first applies opencv-python’s Haar cascade classifier to the video input; this classifier relies on the popular Viola-Jones algorithm for face detection. Briefly, this is a machine learning approach in which a cascade of weak classifiers is trained on many positive (face) and negative (non-face) images. The trained cascade is then used to determine whether a face is present in the video and, if so, precisely where it is located.

Fig 1: Facial detection with OpenCV-python and dlib (Source: pyimagesearch, 2017)

Once facial detection is complete, the application can crop out the rest of each frame and focus on how the skin tone of the subject’s face fluctuates across frames. Because the face is located in the video rather than assumed to be at a fixed position, the subject can move their head around and the application will still be able to identify their heart rate.
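As a rough sketch of this cropping step, the snippet below averages the color inside a face box for each frame to build a per-frame color trace. The helper names `mean_face_color` and `color_trace`, and the `(x, y, w, h)` box layout, are assumptions made for illustration and are not taken from the toolkit.

```python
import numpy as np


def mean_face_color(frame_bgr, box):
    """Average the B, G and R channels inside one (x, y, w, h) face box."""
    x, y, w, h = box
    roi = frame_bgr[y:y + h, x:x + w]       # rows are y, columns are x
    return roi.reshape(-1, 3).mean(axis=0)


def color_trace(frames, boxes):
    """Stack per-frame mean colors into an array of shape (n_frames, 3)."""
    return np.array([mean_face_color(f, b) for f, b in zip(frames, boxes)])


# Synthetic check: the face box moves between the two frames, but the
# trace only reflects the color inside each box.
frame_a = np.zeros((100, 100, 3), dtype=np.uint8)
frame_b = np.zeros((100, 100, 3), dtype=np.uint8)
frame_a[10:30, 10:30] = (10, 120, 200)
frame_b[50:70, 40:60] = (10, 122, 200)
trace = color_trace([frame_a, frame_b], [(10, 10, 20, 20), (40, 50, 20, 20)])
print(trace)
```

Because the box is looked up separately for every frame, head movement changes where the pixels are read from but not which part of the scene they describe.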

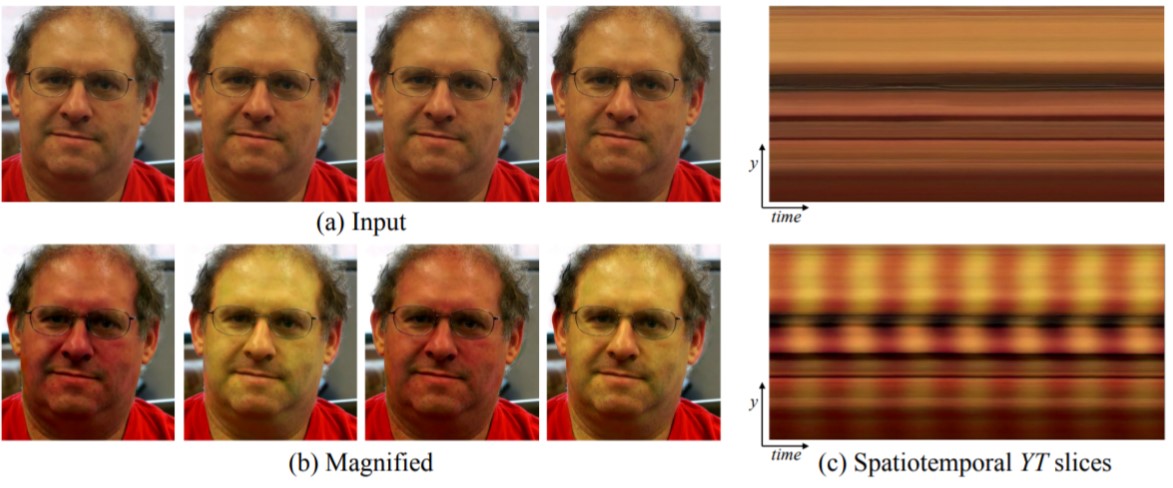

Fig 2: Eulerian Video Magnification on facial video (Source: MIT CSAIL, 2012)

Next, the application applies the EVM framework to visualize heart rate. Figure 2(a) shows four frames from the original video sequence; the application isolates the subject’s face in each so that the subsequent analysis can be restricted to facial skin tone.

In Figure 2(b), the subject’s pulse signal in these same four frames is magnified by selecting and amplifying a band of temporal frequencies that includes plausible human heart rates. This amplification can be understood as exaggerating the variation in color at the selected temporal frequencies, which emphasizes the variation in redness as blood flows through the subject’s face.
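The idea of selecting and amplifying a temporal frequency band can be illustrated on a one-dimensional color trace. The sketch below is a simplification: EVM applies this kind of filtering to every pixel of a spatially decomposed video, whereas this example filters a single signal with an ideal FFT band-pass. The function name `amplify_band`, the 0.75 to 3.0 Hz band (45 to 180 beats per minute), and the amplification factor `alpha` are assumed values chosen for illustration.

```python
import numpy as np


def amplify_band(signal, fps, low_hz=0.75, high_hz=3.0, alpha=50.0):
    """Add back an amplified copy of the chosen temporal frequency band."""
    signal = np.asarray(signal, dtype=float)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    spectrum = np.fft.rfft(signal)
    # Ideal band-pass: zero every frequency outside [low_hz, high_hz].
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0.0
    band = np.fft.irfft(spectrum, n=signal.size)
    return signal + alpha * band


fps = 30.0
t = np.arange(300) / fps
# Constant skin tone plus a faint 1.2 Hz (72 beats per minute) component.
trace = 120.0 + 0.05 * np.sin(2 * np.pi * 1.2 * t)
amplified = amplify_band(trace, fps)
print(trace.max() - trace.min(), amplified.max() - amplified.min())
```

The constant part of the signal lies outside the pass band and is left unchanged, while the faint periodic component is scaled by `1 + alpha`, which is what makes the color variation visible.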

Subsequently, temporal filtering is applied to enable subtle input signals to be identified in spite of any noise in the video input; this also reveals low-amplitude motion. The spatiotemporal visualizations of the input video (top) and the output video (bottom) are shown in Figure 2(c); they represent a vertical scan line from the input and output videos plotted over time to illustrate how periodic color variation is amplified.
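A spatiotemporal slice of the kind shown in Figure 2(c) can be built by taking the same pixel column from every frame and placing the columns side by side. The following is a minimal sketch; the function name `scanline_over_time` and the `(n_frames, height, width, 3)` array layout are assumptions for the example.

```python
import numpy as np


def scanline_over_time(frames, column):
    """Stack one pixel column from every frame into a (height, n_frames, 3) image."""
    video = np.asarray(frames)               # shape: (n_frames, height, width, 3)
    # Pick the column in every frame, then put time on the horizontal axis.
    return video[:, :, column, :].transpose(1, 0, 2)


video = np.zeros((90, 48, 64, 3), dtype=np.uint8)
slice_img = scanline_over_time(video, column=32)
print(slice_img.shape)  # (48, 90, 3)
```

In the resulting image, the vertical axis is position along the scan line and the horizontal axis is time, so a periodic color change appears as a repeating pattern from left to right.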

Finally, under the brightness constancy assumption, the application is able to estimate the subject’s heart rate.
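One common way to turn a periodic color signal into a heart rate, shown here as an assumption rather than as the toolkit's confirmed method, is to take the dominant frequency within a plausible heart rate band and convert it to beats per minute. The function name `estimate_bpm` and the band limits are hypothetical choices for this sketch.

```python
import numpy as np


def estimate_bpm(signal, fps, low_hz=0.75, high_hz=3.0):
    """Return the dominant in-band frequency of a signal, in beats per minute."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()          # drop the constant offset
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    power = np.abs(np.fft.rfft(signal)) ** 2
    # Only consider frequencies that correspond to plausible heart rates.
    in_band = (freqs >= low_hz) & (freqs <= high_hz)
    peak_hz = freqs[in_band][np.argmax(power[in_band])]
    return 60.0 * peak_hz


fps = 30.0
t = np.arange(300) / fps                     # 10 seconds of samples
rng = np.random.default_rng(0)
# Synthetic trace: a 1.2 Hz pulse component buried in noise.
noisy = np.sin(2 * np.pi * 1.2 * t) + 0.5 * rng.standard_normal(t.size)
bpm = estimate_bpm(noisy, fps)
print(round(bpm, 1))
```

The frequency resolution of this estimate is `fps / n_samples`, so a 10 second window at 30 frames per second resolves heart rate in steps of 6 beats per minute; longer windows give finer steps at the cost of slower response.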

References

- https://www.pyimagesearch.com/2017/04/03/facial-landmarks-dlib-opencv-python/

- http://people.csail.mit.edu/mrub/papers/vidmag.pdf

- https://docs.opencv.org/3.4/db/d28/tutorial_cascade_classifier.html

- https://github.com/habom2310/Heart-rate-measurement-using-camera

- http://people.csail.mit.edu/mrub/evm/

- POH, M.-Z., MCDUFF, D. J., AND PICARD, R. W. 2010. Non-contact, automated cardiac pulse measurements using video imaging and blind source separation. Opt. Express 18, 10, 10762–10774.

- PHILIPS, 2011. Philips Vitals Signs Camera. http://www.vitalsignscamera.com/

- VERKRUYSSE, W., SVAASAND, L. O., AND NELSON, J. S. 2008. Remote plethysmographic imaging using ambient light. Opt. Express 16, 26, 21434–21445.