JS-Aruco Marker Detector

Fiducial Marker Detection

Background

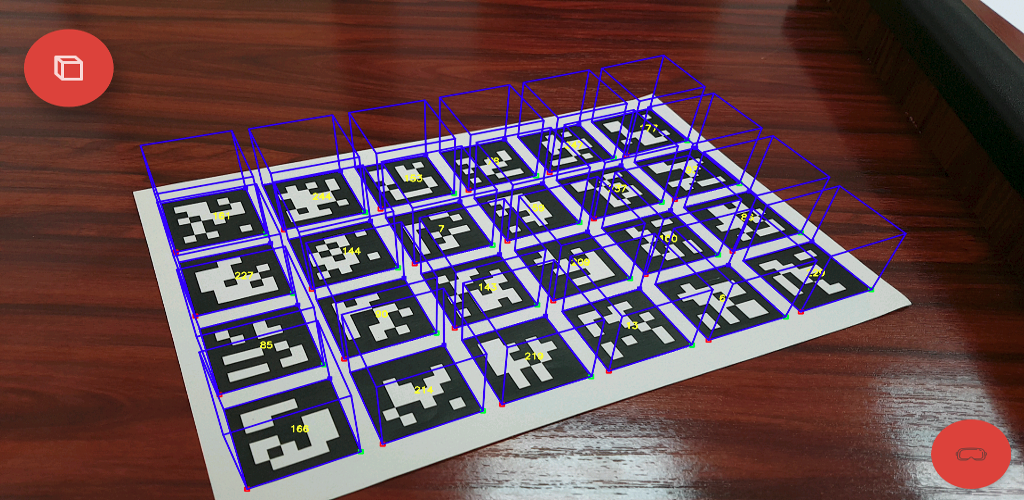

Square fiducial markers have become a popular method for pose estimation in applications such as autonomous robots, unmanned vehicles, and virtual trainers. They let users estimate the pose of the camera at low cost, with high robustness and speed. The markers can be printed on a regular printer, placed in the desired environment, and their locations then registered from a set of images. Several families of fiducial markers are available, such as ArToolKit+, Chilitags, AprilTags, and ArUco, each with its own dictionary of markers. The dictionaries are designed so that their markers are as different from one another as possible, which avoids confusion and lets each marker be classified unambiguously. More on how these markers are created can be found in reference 2 below.

The multimodal toolkit contains an implementation of fiducial marker tracking based on ArUco, an open-source library built on OpenCV for detecting square fiducial markers in images. The application takes a video sequence as input, identifies all the markers present in each frame, and returns their locations.

Process

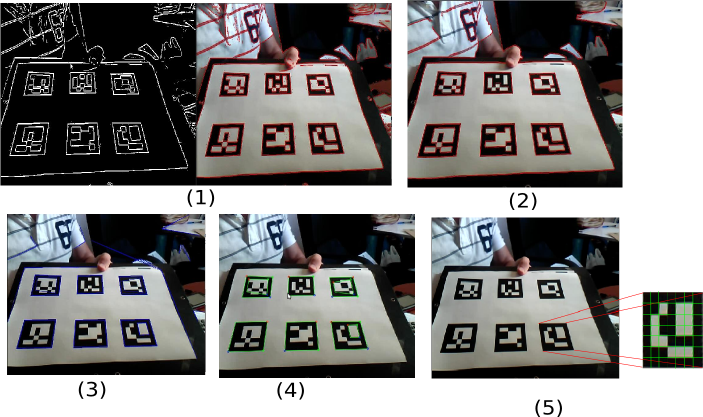

The marker detection process of ArUco is as follows:

- Apply adaptive thresholding to obtain borders (Figure 1)

- Find contours. This step detects the real markers but also many undesired borders. The rest of the process aims to filter out the unwanted borders.

- Remove borders with a small number of points (Figure 2)

- Compute a polygonal approximation of each contour and keep only the convex contours with exactly 4 corners (i.e., rectangles) (Figure 3)

- Sort corners in an anti-clockwise direction.

- Remove rectangles that are too close to each other. This is required because the adaptive threshold normally detects both the internal and the external part of the marker's border. At this stage, we keep the outermost border. (Figure 4)

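The geometric filtering steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the js-aruco code: all function names are hypothetical, the "too close" test compares quadrilateral centroids (a simplification; a library may compare corner-to-corner distances instead), and "anti-clockwise" is taken to mean a positive shoelace area in the given coordinates.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Quad = List[Point]


def cross(o: Point, a: Point, b: Point) -> float:
    """Z-component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def is_convex_quad(poly: List[Point]) -> bool:
    """True if poly has exactly 4 corners and every turn has the same sign."""
    if len(poly) != 4:
        return False
    turns = [cross(poly[i], poly[(i + 1) % 4], poly[(i + 2) % 4]) for i in range(4)]
    return all(t > 0 for t in turns) or all(t < 0 for t in turns)


def signed_area(poly: List[Point]) -> float:
    """Shoelace formula; the sign encodes the winding direction."""
    n = len(poly)
    return 0.5 * sum(
        poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
        for i in range(n)
    )


def sort_anticlockwise(quad: Quad) -> Quad:
    """Keep the first corner; reverse the traversal if the area is negative."""
    if signed_area(quad) < 0:
        return [quad[0], quad[3], quad[2], quad[1]]
    return list(quad)


def perimeter(quad: Quad) -> float:
    return sum(math.dist(quad[i], quad[(i + 1) % 4]) for i in range(4))


def centroid(quad: Quad) -> Point:
    return (sum(p[0] for p in quad) / 4, sum(p[1] for p in quad) / 4)


def remove_too_close(quads: List[Quad], min_dist: float) -> List[Quad]:
    """Visit quads from largest to smallest perimeter and drop any quad whose
    centroid lies within min_dist of an already kept one (assumed criterion)."""
    kept: List[Quad] = []
    for q in sorted(quads, key=perimeter, reverse=True):
        if all(math.dist(centroid(q), centroid(k)) >= min_dist for k in kept):
            kept.append(q)
    return kept
```

Visiting candidates from the largest perimeter down means that, of two nested borders around the same marker, the external one is the one that survives.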

Marker Identification

- Remove the perspective projection using a homography to obtain a frontal view of the rectangle area (Figure 5)

- Threshold the area using Otsu's method. Otsu's algorithm assumes a bimodal intensity distribution and finds the threshold that maximizes the between-class variance, which is equivalent to minimizing the within-class variance.

- Identify the internal code. If the rectangle is a marker, it has an internal code. The marker is divided into a 7x7 grid, of which the internal 5x5 cells contain the id information. The remaining cells correspond to the external black border. We first check that the external black border is present. We then read the internal 5x5 cells and check whether they form a valid code (the code may need to be rotated to find the valid orientation).

- For valid markers, refine the corners using subpixel interpolation
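Two of the identification steps above, Otsu thresholding and reading the internal code, can be sketched as follows. This is an illustrative sketch, not the library's implementation: the function names are hypothetical, the input to `decode_marker` is assumed to be a 7x7 grid of 0/1 cell values already extracted from the thresholded frontal view, and the row codewords and id layout in `VALID_ROWS` are an assumption modeled on the original ArUco dictionary that should be checked against the library in use.

```python
from typing import List, Optional, Sequence, Tuple

Grid = List[List[int]]


def otsu_threshold(pixels: Sequence[int]) -> int:
    """Return the grey level t (0..255) that maximizes between-class variance.

    Pixels with value <= t form the dark class, pixels > t the bright class.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_score = 0, -1.0
    w0 = 0    # pixel count of the dark class
    sum0 = 0  # intensity sum of the dark class
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mean0, mean1 = sum0 / w0, (sum_all - sum0) / w1
        # Proportional to the between-class variance (constant factor dropped).
        score = w0 * w1 * (mean0 - mean1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def rotate_cw(bits: Grid) -> Grid:
    """Rotate a square bit grid by 90 degrees clockwise."""
    return [list(row) for row in zip(*bits[::-1])]


def has_black_border(cells: Grid) -> bool:
    """True if every cell on the outer ring of the grid is 0 (black)."""
    n = len(cells)
    return all(
        cells[r][c] == 0
        for r in range(n)
        for c in range(n)
        if r in (0, n - 1) or c in (0, n - 1)
    )


# Assumption: each valid 5-bit row is one of these four codewords, and the
# bits at positions 1 and 3 carry the id (2 bits per row, 10 bits in total).
VALID_ROWS = {(1, 0, 0, 0, 0), (1, 0, 1, 1, 1), (0, 1, 0, 0, 1), (0, 1, 1, 1, 0)}


def decode_marker(cells: Grid) -> Optional[Tuple[int, int]]:
    """Return (marker_id, clockwise_quarter_turns_applied) or None if invalid."""
    if len(cells) != 7 or not has_black_border(cells):
        return None
    inner = [row[1:6] for row in cells[1:6]]
    for turns in range(4):
        if all(tuple(row) in VALID_ROWS for row in inner):
            marker_id = 0
            for row in inner:
                marker_id = (marker_id << 2) | (row[1] << 1) | row[3]
            return marker_id, turns
        inner = rotate_cw(inner)
    return None
```

The sketch returns the first orientation in which every row is a valid codeword; a more robust reader would score all four rotations (for example by Hamming distance to the nearest codeword) and pick the best one.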

Results

To use the fiducial marker tracking function, select the ‘Fiducial Marker Detection’ option from the tools page. Once the page loads, ensure that the webcam is enabled and place the fiducial markers where the camera can see them. The function automatically detects the markers and records their locations in the video frame. Users can also record a video and download the captured data.

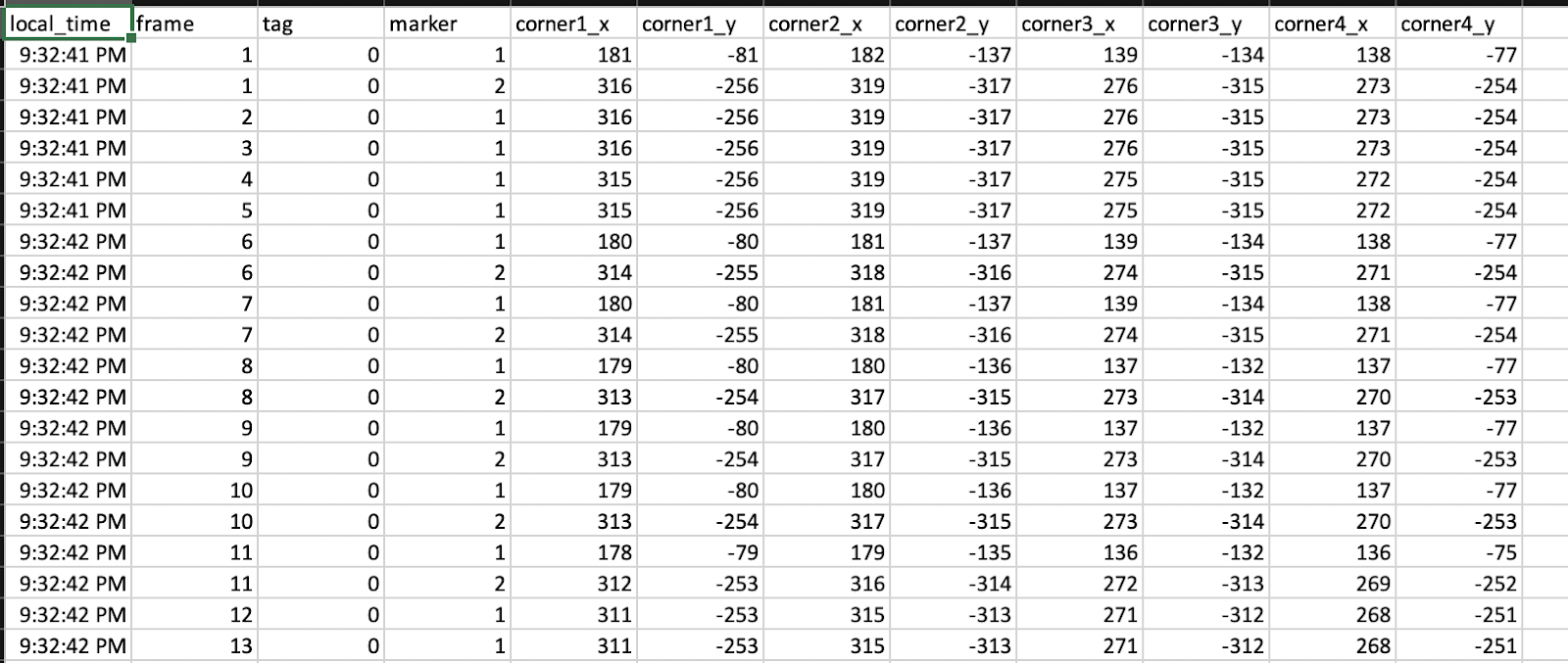

The final output for fiducial marker tracking is a series of X and Y coordinates in a CSV file (see example in figure below). The coordinates are measured from the top-left corner of the video input, which is (0, 0). For each frame the model tracks, if it finds one or more fiducial markers, it returns the X and Y coordinates of the four corners of each marker. Each record then stores the user's local time when the frame was tracked, the frame number (counted from the beginning of the recording), the marker number (the top-left-most marker being 1), and the corner coordinates (corner1_x, corner1_y, etc.). Each line in the output file corresponds to one marker in one frame. Frames in which no markers are detected are omitted from the final output.
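As a rough sketch of how such a file might be consumed, the snippet below reads the rows and computes the centre of each marker per frame. Only `corner1_x` and `corner1_y` are named in the description above; the other column names (`time`, `frame`, `marker`, `corner2_x` and so on) and the sample rows are assumptions made up for illustration, so adjust them to the header of the file you actually download.

```python
import csv
import io
from collections import defaultdict

# Made-up sample data; column names other than corner1_x / corner1_y are assumed.
SAMPLE = """\
time,frame,marker,corner1_x,corner1_y,corner2_x,corner2_y,corner3_x,corner3_y,corner4_x,corner4_y
10:15:02.120,1,1,100,50,200,50,200,150,100,150
10:15:02.120,1,2,300,60,360,60,360,120,300,120
10:15:02.187,3,1,104,52,204,52,204,152,104,152
"""


def marker_centres(csv_text):
    """Map frame number -> {marker number: (centre_x, centre_y)}."""
    centres = defaultdict(dict)
    for row in csv.DictReader(io.StringIO(csv_text)):
        xs = [float(row[f"corner{i}_x"]) for i in range(1, 5)]
        ys = [float(row[f"corner{i}_y"]) for i in range(1, 5)]
        # The centre is taken as the mean of the four corners.
        centres[int(row["frame"])][int(row["marker"])] = (sum(xs) / 4, sum(ys) / 4)
    return dict(centres)


centres = marker_centres(SAMPLE)
```

Frame 2 is absent from the sample, mirroring the rule that frames without detections are omitted, so any downstream code should look frames up by number rather than assume consecutive rows.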

References

- GitHub: https://github.com/jcmellado/js-aruco

- Generation of fiducial marker dictionaries using Mixed Integer Linear Programming: https://www.researchgate.net/publication/282426080_Generation_of_fiducial_marker_dictionaries_using_Mixed_Integer_Linear_Programming

- More on ArUco: http://www.uco.es/investiga/grupos/ava/node/26